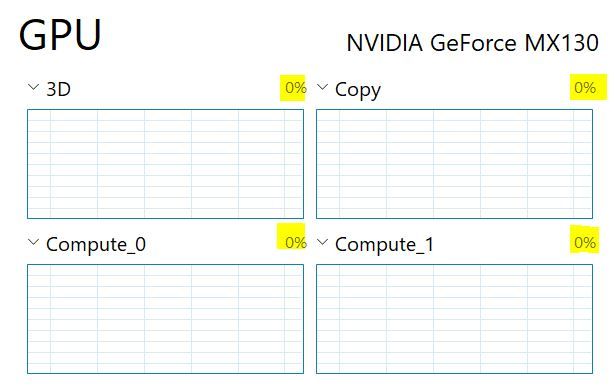

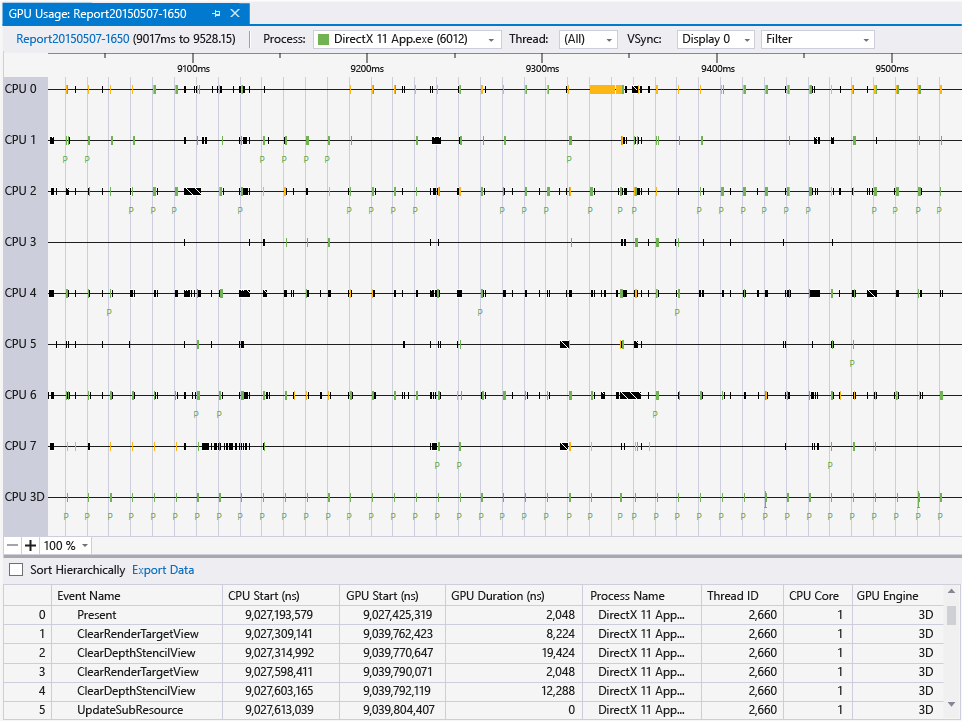

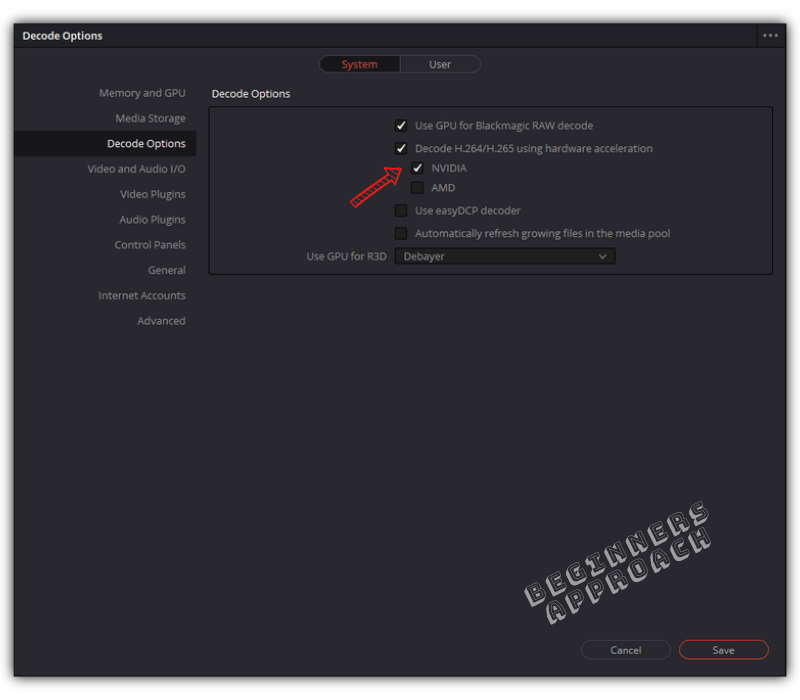

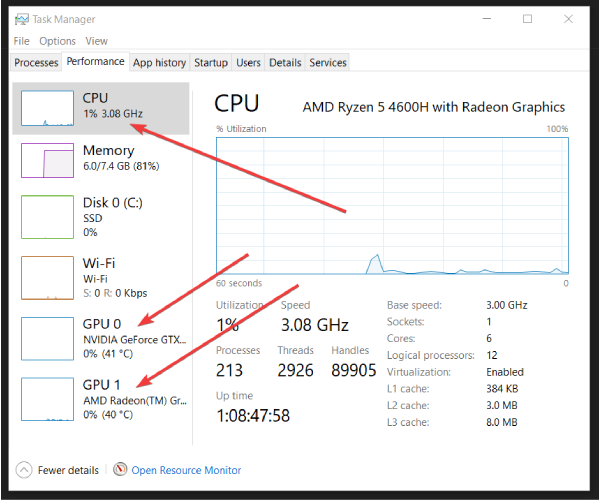

I noticed that every browser, including Edge, doesn't use the GPU to process video on YouTube, because my CPU is under high load. My laptop's specs are an i5-6300U with an HD 520 iGPU with the latest

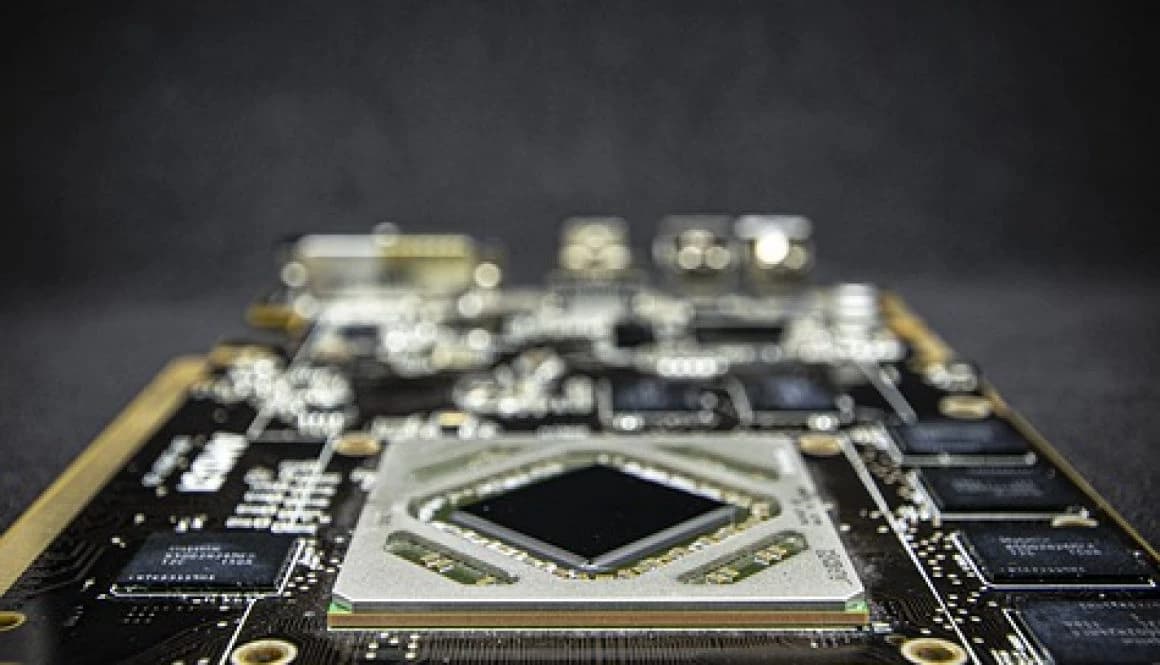

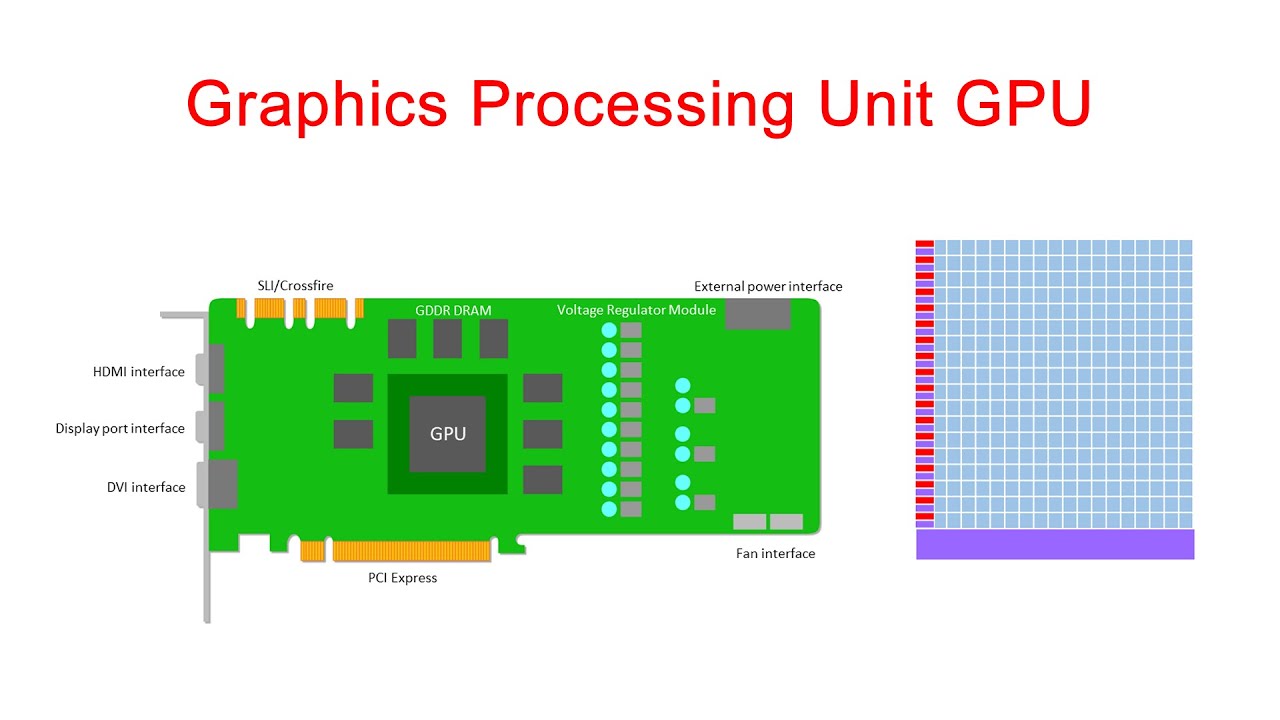

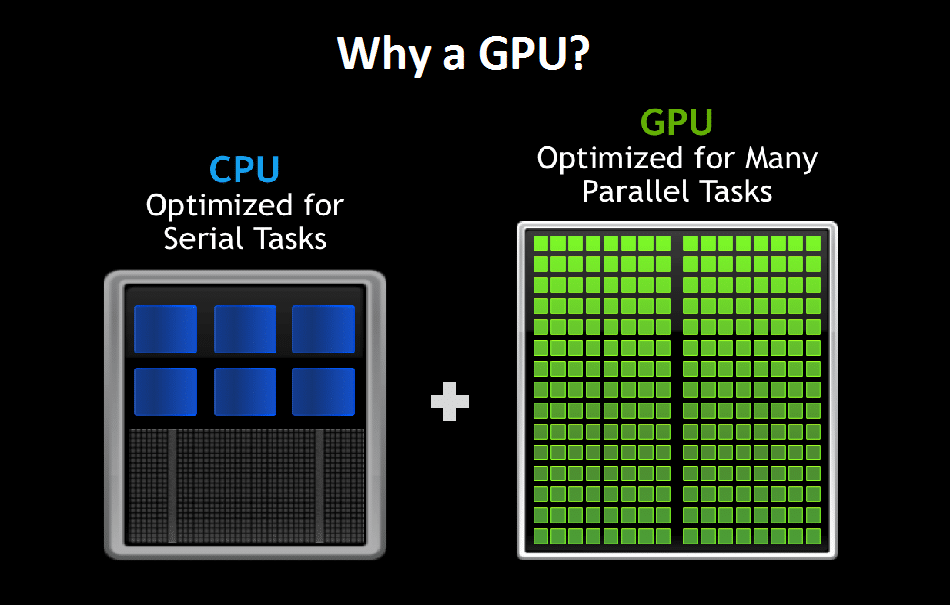

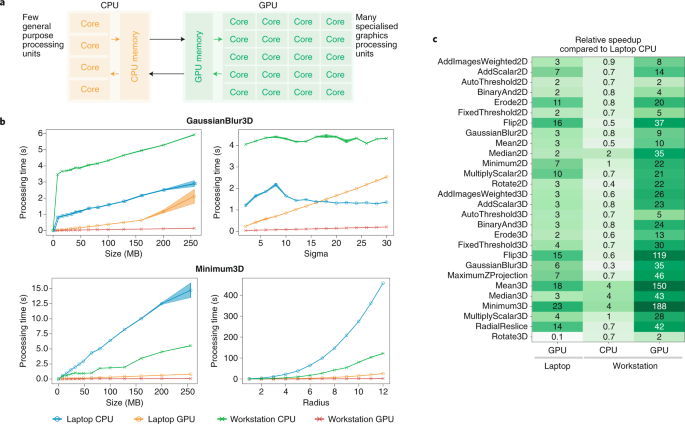

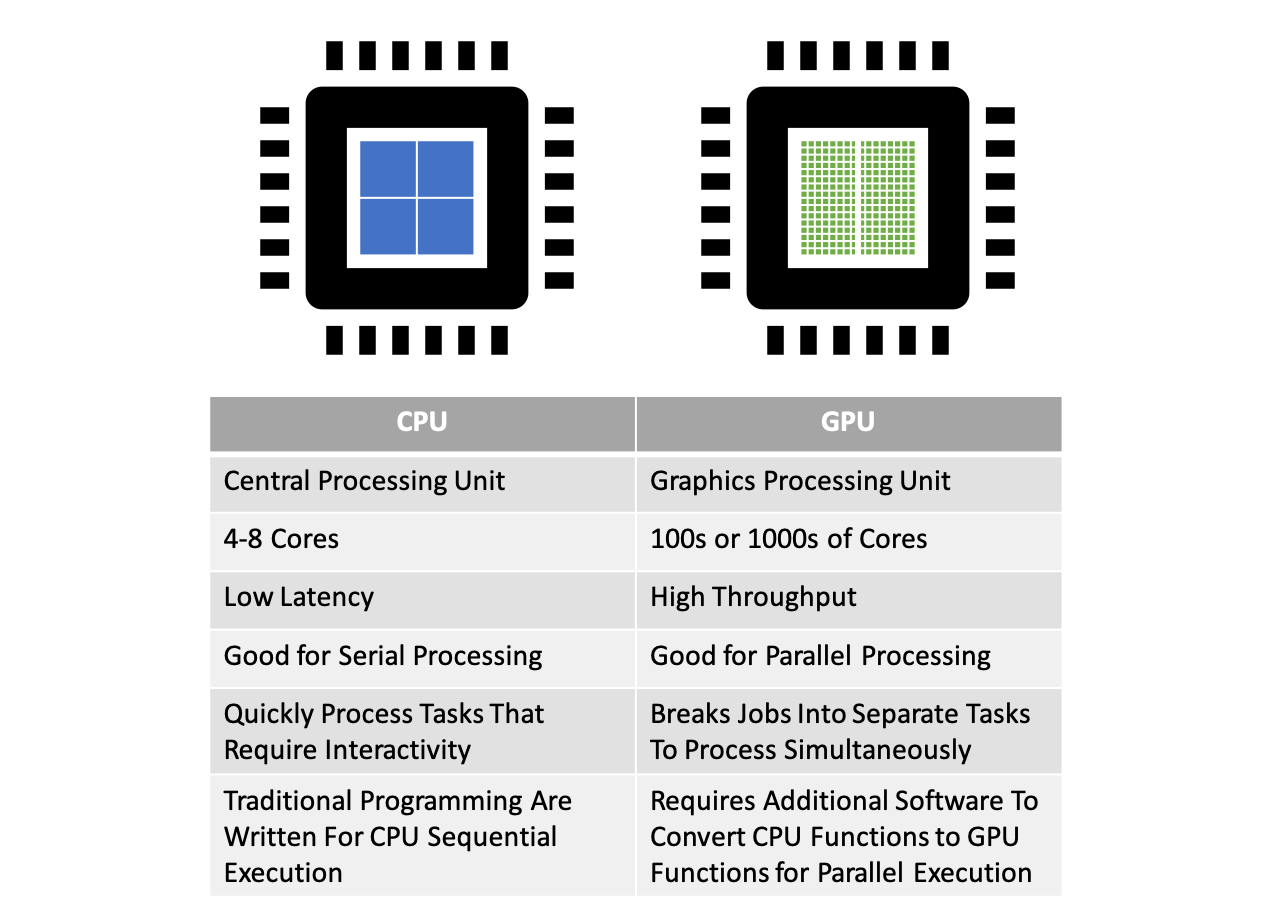

Parallel Computing — Upgrade Your Data Science with GPU Computing | by Kevin C Lee | Towards Data Science

![Hands-on: DaVinci Resolve's eGPU-accelerated timeline performance and exports totally crush integrated GPU results [Video] - 9to5Mac](https://9to5mac.com/wp-content/uploads/sites/6/2018/04/davinci-resolve-14-gpu-egpu-selection-imac-pro.jpg?quality=82&strip=all)